A new type of recruitment market is emerging.

A few weeks ago, Payman AI released an AI service billed as "AI that Pays Humans," which is currently in closed beta. According to the service, once a client deposits funds into the account of Payman's AI agent, the client can authorize the AI to get real-world tasks done that only humans can currently perform.

In the 'Collecting 10 Reviews for Customer Management' project, for example, the AI defines the minimum elements each requested review must contain and posts the request on the platform. Interested individuals then go out into the real world, collect reviews, and submit them. The AI judges whether each review is acceptable and pays the contributor the allocated fee.
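The flow described here (the AI posts a request, people submit work, the AI checks it and pays) can be sketched roughly in code. This is only an illustrative sketch and not Payman's actual API: the `Task` class, the `is_acceptable` and `submit` functions, the field names, and the fee and budget figures are all hypothetical, and the "AI judgment" is reduced to a simple completeness check.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A request posted by the AI agent (every name here is hypothetical)."""
    title: str
    required_fields: tuple[str, ...]  # minimum components each review must contain
    fee_per_submission: float         # illustrative figure, not from the service
    budget: float                     # funds the client deposited for the agent
    payouts: dict[str, float] = field(default_factory=dict)


def is_acceptable(task: Task, review: dict[str, str]) -> bool:
    """Stand-in for the AI's judgment: every required field must be non-empty."""
    return all(review.get(name, "").strip() for name in task.required_fields)


def submit(task: Task, worker: str, review: dict[str, str]) -> bool:
    """Evaluate one submission; pay the worker if it passes and budget remains."""
    if not is_acceptable(task, review) or task.budget < task.fee_per_submission:
        return False
    task.budget -= task.fee_per_submission
    task.payouts[worker] = task.payouts.get(worker, 0.0) + task.fee_per_submission
    return True


task = Task(
    title="Collecting 10 Reviews for Customer Management",
    required_fields=("customer", "rating", "comment"),
    fee_per_submission=5.0,
    budget=50.0,
)
accepted = submit(task, "alice", {"customer": "Cafe A", "rating": "4", "comment": "Quick replies"})
rejected = submit(task, "bob", {"customer": "Shop B", "rating": ""})  # missing fields, so no payout
```

Even in this toy version, the decision to pay rests entirely on the agent's own acceptance rule, which is where the question of trust comes in.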

For now, this may look like a simple AI application. It is noteworthy, however, because it offers a clue to addressing the most common and most difficult bottleneck in AI adoption: the issue of ‘trust.’

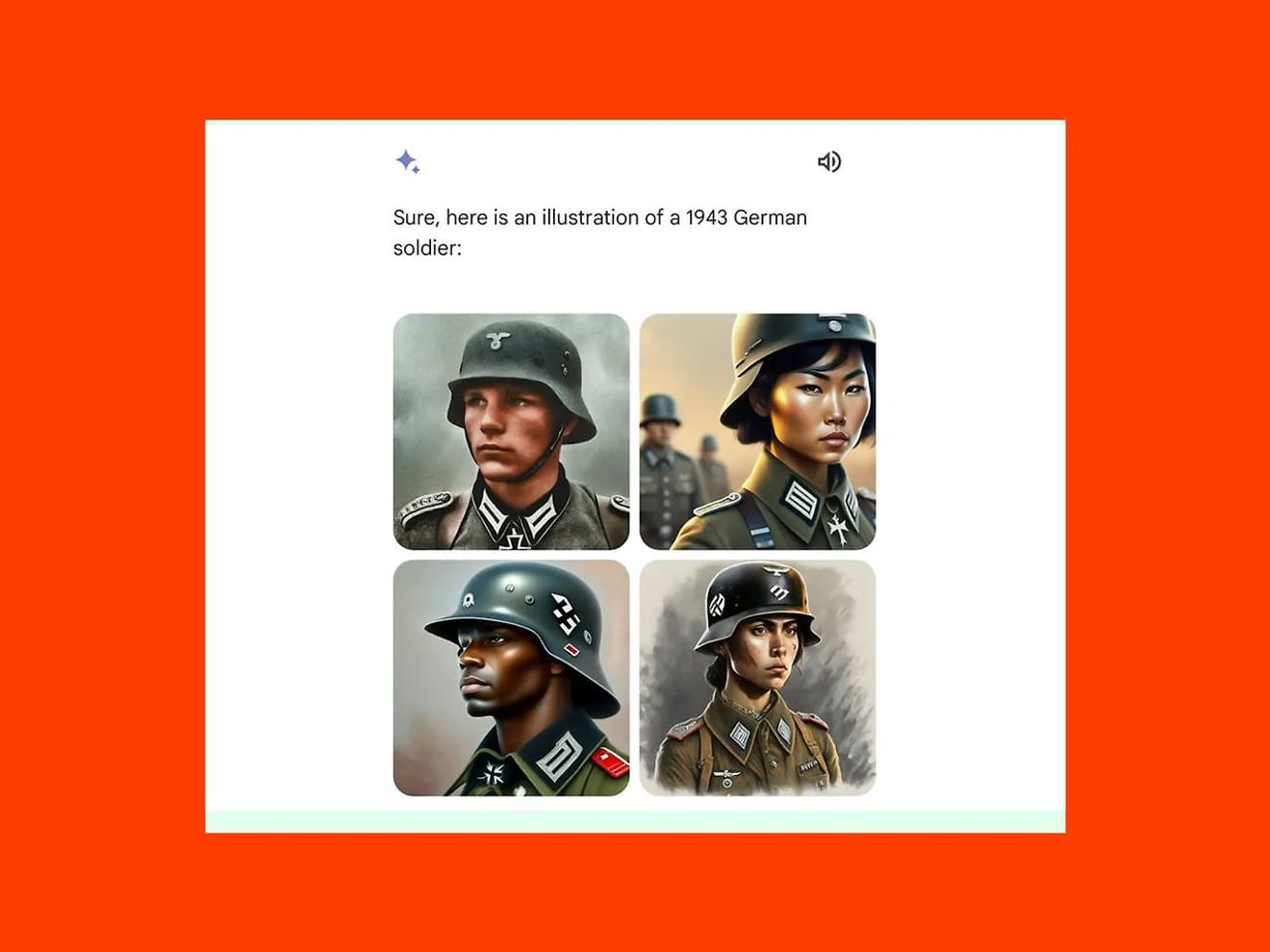

One of the primary concerns about AI adoption is whether a system can be kept from producing biased output after learning incorrect patterns from its data. In February 2024, Google's generative AI model, Gemini, drew criticism and had its image generation of people temporarily suspended after it responded to requests for images of German soldiers in 1943 with East Asian women and Black men rather than white men. The result was widely attributed to an emphasis on diversity that overrode historical accuracy.

As the Payman example illustrates, however, building a stage of human review and participation into the middle of a task can help catch errors and strengthen accountability across the project, which in turn boosts the credibility of the service. In essence, the common reading of this case is that AI employs humans to ‘complete tasks beyond AI’s capabilities.’

As this approach becomes more widespread, though, humans will need to adapt to new criteria for demonstrating their skills and experience.

When AI acts as the employer, the source of trust shifts to the accuracy of algorithms and the reliability of data. The level of trust depends on how the AI evaluates and selects individuals. People applying for projects may therefore demand external standards that ensure the relevant algorithms are transparent and fair and that the data is accurate and unbiased.

In traditional employment markets, trust is built through direct interpersonal relationships, where testimonials and recommendations influence hiring decisions. On AI-driven employment platforms, by contrast, the platform's internal reputation system may be the only source of trust. Feedback and reviews left by the people one has worked with will therefore matter more than ever. It also means that something like the 'star rating terrorism' seen on Baemin (a food delivery app), which has caused severe harm to self-employed business owners, could occur in the employment environment as well.

For a clearer understanding, let's consider the implications for job titles.

In employment, job titles not only denote roles but also serve as crucial symbols through which candidates demonstrate their value and skills. In AI-based employment models, job titles can be used as a key indicator for assessing a candidate's role, much as we have grown used to keyword-based technical criteria deciding the outcome of document screening in large companies' hiring processes.

In the mid-1990s, some human-centered researchers reportedly questioned the title 'Understander' on their business cards. Given how clearly roles differ across fields, as with AI researchers and UX designers, a job title should rest on both self-awareness and a shared understanding of market needs, so that it conveys the specific role an individual has played in a particular field.

This raises the question of how well the job titles that human candidates define for themselves will align with the criteria AI uses to assess markets and industries in an AI-driven employment environment. It also prompts us to consider how the various assessment criteria might be standardized. Perhaps now is the time to look more deeply at the concept of 'trust' in the evolving job market.
