A study released by Stanford University researchers on October 18th reveals how opaque GPT-4 and other cutting-edge AI systems are, and how risky that secrecy may be.

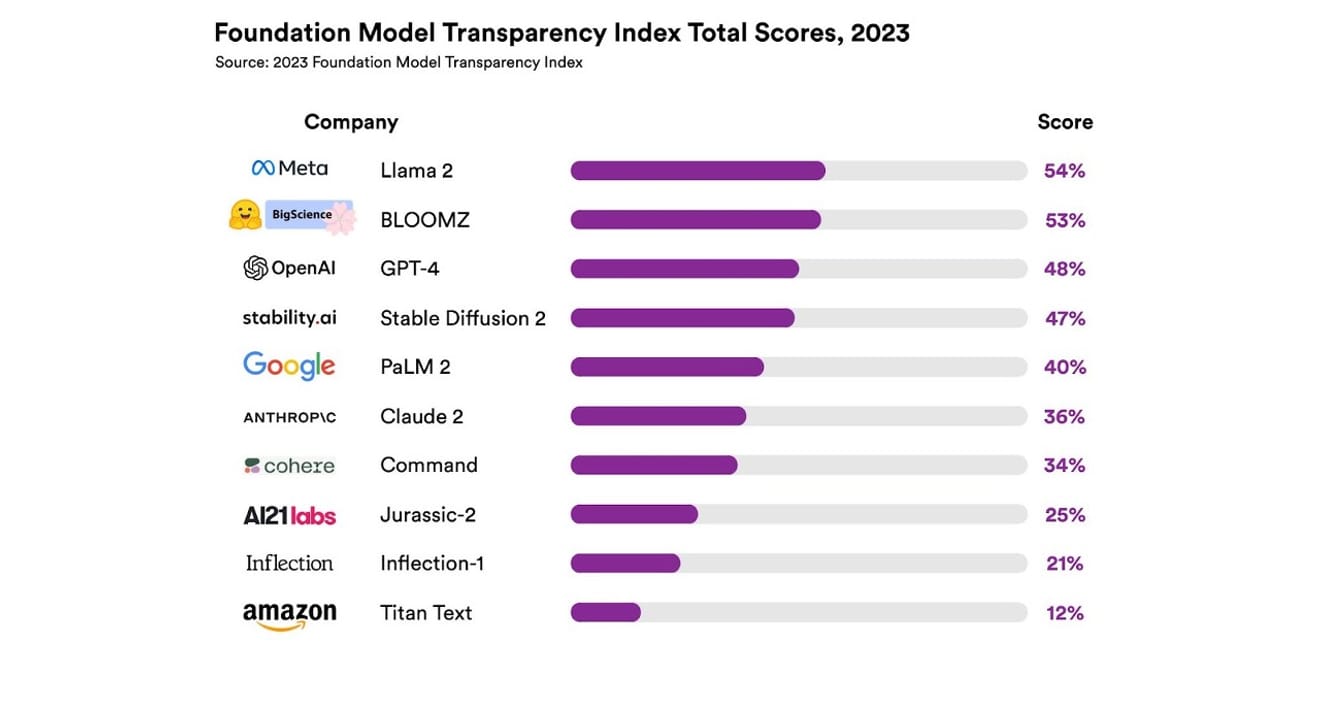

Introducing The Foundation Model Transparency Index, Stanford University

The researchers examined 10 AI systems, most of them large language models (LLMs) like those behind ChatGPT and other chatbots. These included widely used commercial models such as OpenAI's GPT-4, Google's PaLM 2, and Amazon's Titan Text. Openness was scored on 100 indicators grouped into 13 categories. These covered how transparent developers were about the data used to train their models, including how it was collected and annotated and whether it contained copyrighted material. The researchers also checked whether developers publicly disclosed the hardware used to train and run the models, the software frameworks involved, and the project's energy consumption.

No model scored higher than 54% on the transparency scale. Amazon's Titan Text was rated the least transparent, while Meta's Llama 2 was rated the most open. Notably, even Llama 2, which has come to represent the open-source side of the current open- versus closed-model rivalry, did not disclose its training data or how that data was collected and curated. In other words, even as AI's influence on society grows, opacity remains widespread and persistent across the industry.

This suggests that the AI industry may soon be driven more by profit than by scientific progress, potentially leading to a monopolistic future dominated by a handful of companies.

Eric Lee/Bloomberg via Getty Images

OpenAI CEO Sam Altman has already met with policymakers around the world to explain this new and unfamiliar form of intelligence and to offer help in drafting concrete regulations. While he generally supports an international body to oversee AI, he also argues that certain specific rules, such as banning all copyrighted material from training datasets, could create unfair obstacles. This illustrates how far the "openness" in OpenAI's name has drifted from the radical transparency the company originally advocated.

At the same time, the Stanford report suggests that companies have little reason to keep their models this secret for the sake of competition. Nearly every developer scored poorly, so the findings point to industry-wide underperformance rather than to a competitive edge that secrecy protects. For instance, no company reportedly publishes statistics on how many users rely on its models, or on the geographic regions or market segments where those models are used.

Organizations that follow open-source principles have a saying known as Linus's Law: "Given enough eyeballs, all bugs are shallow." In other words, the more people who can inspect something, the faster problems are found and fixed.

However, open-source practices can also diminish the status and recognition of the contributors involved, both inside and outside the open organization. An unconditional emphasis on openness is therefore of limited value. Rather than dwelling on whether a model is open or closed, it may be more productive to focus the discussion on gradually expanding external access to the "data" underlying AI models.

Scientific progress depends on research findings being reproducible. Without concrete ways to guarantee transparency about the key components that go into building each model, the industry is likely to remain closed, stagnant, and monopolistic. This must be treated as a priority, especially given how rapidly AI is spreading across industries today and will continue to spread in the future.

Journalists and scientists increasingly need to understand the data behind these models, and transparency is a prerequisite for the policies lawmakers are planning. Transparency also matters to the public: as end users of AI systems, people may become either perpetrators or victims of problems involving intellectual property, energy use, and bias. Sam Altman argues that mitigating the risk of human extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war. But before we ever reach such a perilous point, we must first ensure that our society can sustain a healthy relationship with AI as it evolves.

*This article is the original text of a bylined column published in the Electronic Times on October 23, 2023.
