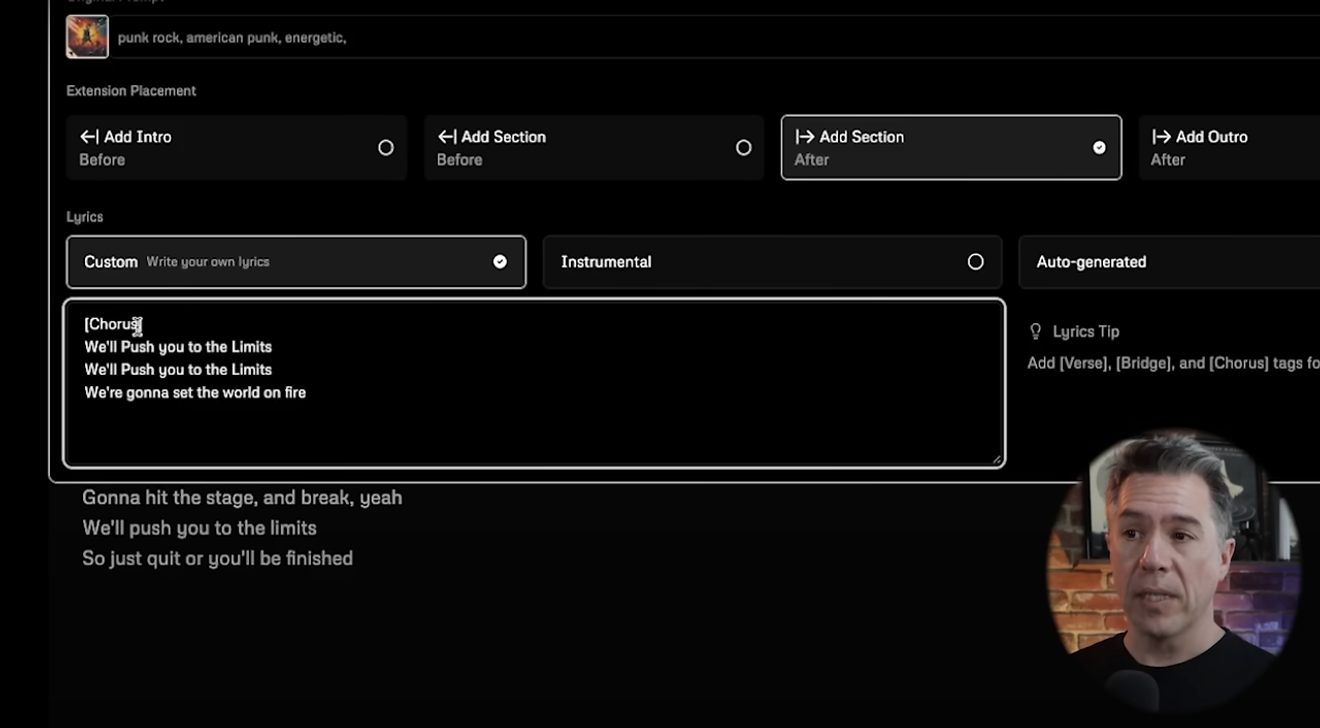

Last Wednesday, Udio officially launched. It is an AI music generation service that creates music from text prompts and can add elements such as vocals and lyrics. The service, which spent several months in private beta, is the brainchild of a team of former Google DeepMind employees. It drew significant attention after securing $10 million in seed funding from prominent investors and celebrities, including the musicians will.i.am and Common.

Interestingly, reviewers who were given early access with the support of the Udio team consistently reported that the AI-generated music was of very high quality, particularly in its live-performance feel and vocal harmonies. It is also easy to find articles highlighting how the service simplifies music production and could allow anyone to become a composer. These articles anticipate a revolutionary shift in how music is created and consumed.

These advancements are yet another example of the AI trend toward democratizing creative expression by making tools for artistic creation accessible to a wider audience. However, alongside the discussion of what this efficiency and ease of use make possible, there are questions that must be considered.

Can these tools reproduce the intricate meaning and depth of emotion that human creators infuse into their work? This question is crucial for understanding both the potential and limitations of AI in the creative industries moving forward.

The musician HAINBACH, in his YouTube video 'How Textures Tell a Story,' takes viewers on a walk through a tranquil park, surrounded by grass and trees. He demonstrates how the hard-to-control electronic sounds of the Lyra-8 synthesizer take on different meanings and narratives depending on where the listener happens to be. For him, the Lyra-8 is an instrument whose narratives are shaped by the sensory and cultural context in which its sound is heard.

Manufacturer Soma describes the Lyra-8 as an 'organismic' synthesizer due to its capacitive touch surface. Instead of adhering to a conventional keyboard layout, it interacts with the user's physical attributes like touch sensitivity, humidity, and temperature. This creates a more intimate and physically immersive environment for the user, enhancing the depth of interaction and making the sound creation experience highly personal and exploratory. This serves as an example of a rich and multi-dimensional sonic experience that AI has yet to convincingly replicate.

The world is already overflowing with pings, beeps, and music snippets. We spend most of our time immersed in the screens of our monitors and devices, saturated with sounds that lack depth or contextual relevance. The news of yet another AI music generation service like Udio is therefore both exciting and concerning. Fundamentally, I believe AI technologies like Udio should not only mimic human musical abilities but also aim to understand and reflect the complex emotional and cultural structures that underpin human creativity.

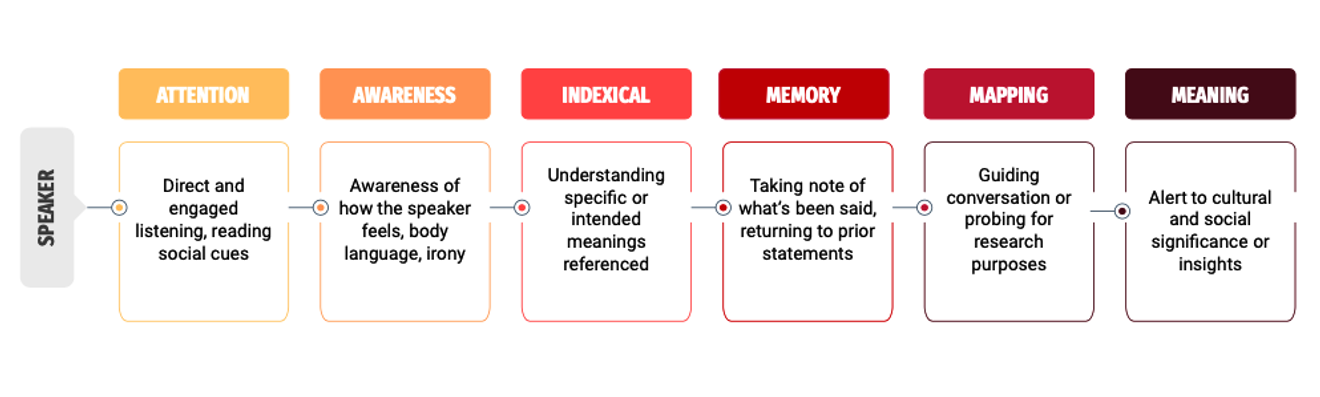

In his paper, 'The Sound of Friction,' cultural anthropologist Michael Powell emphasizes that 'listening' is a highly effective technique for understanding human experience and cultural interaction. His research provides several insights that AI music service developers might consider:

Firstly, an interactive feedback loop might be appropriate. Borrowing the iterative process of the ethnographic interview, developers could build a system in which the AI asks follow-up questions in response to a basic text input, or refines and adjusts the generated music based on the user's initial reactions.

Secondly, they could aim for more personalized results by analyzing the emotional tone and the cultural textures attached to any references in the input text.

Thirdly, just as ethnographers gain deeper insight as their research progresses, the AI system could keep an extended conversational history and use it to broaden its understanding of each user's preferences and cultural nuances over time.
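
To make these three suggestions a little more concrete, here is a minimal sketch in Python of what such a loop might look like. Everything in it is hypothetical: the `Session` class, the `FOLLOW_UPS` questions, and the `brief()` format are illustrative assumptions, not a description of how Udio or any other service actually works. The idea is only that each answer to a follow-up question is stored and folded back into the description that would be handed to a music generator.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

# Hypothetical follow-up questions, keyed by the aspect each one probes.
FOLLOW_UPS = {
    "place": "Where do you imagine hearing this?",
    "feeling": "What should the listener feel?",
    "reference": "Is there a tradition or artist it should echo?",
}


@dataclass
class Session:
    """Illustrative conversation state for one user (an assumption, not a real API)."""
    prompt: str
    answers: dict = field(default_factory=dict)

    def next_question(self) -> Optional[Tuple[str, str]]:
        # Return the first aspect the user has not yet spoken to, if any.
        for aspect, question in FOLLOW_UPS.items():
            if aspect not in self.answers:
                return aspect, question
        return None

    def answer(self, aspect: str, text: str) -> None:
        # Keep every answer so later requests can build on earlier ones.
        self.answers[aspect] = text

    def brief(self) -> str:
        # Combine the first prompt with everything learned so far.
        parts = [self.prompt] + [f"{k}: {v}" for k, v in self.answers.items()]
        return "; ".join(parts)


session = Session("a slow ambient piece")
aspect, question = session.next_question()
session.answer(aspect, "a quiet park at dusk")
print(session.brief())
```

In a real system the questions would presumably be generated from the input rather than fixed in advance, and the stored answers would persist across sessions; the sketch fixes both only to keep the loop visible.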

AI like Udio represents a significant leap in making music creation more accessible. However, it also prompts us to reflect on how to bridge the gap between what these tools produce and the nuanced human experiences that music can offer. This dialogue between technology and tradition, between innovation and depth, will define the future trajectory of music in the digital age. Considering not only how sound is created but also how it is perceived and valued within our society is therefore perhaps the best way to slow the inevitable arrival of an era in which 'human creations' become a premium label.
